In the last two articles on Spock I've covered mocking and stubbing. And I was pretty sold on Spock just based on that. But for a database driver, there's a killer feature: Data Driven Testing.

All developers have a tendency to think of and test the happy path, not least because that's usually the path in the User Story - "As a customer I want to withdraw money and have the correct amount in my hand". We tend not to ask "what happens if they ask to withdraw money when the cash machine has no cash?" or "what happens when their account balance is zero?".

With any luck you'll have a test suite covering your happy paths, and probably at least twice as many grumpy paths. If you're like me, and you like one test to test one thing (and who doesn't?), sometimes your test classes can get quite long as you test various edge cases. Or, much worse (and I've done this too) you use a calculation remarkably like the one you're testing to generate test data. You run your test in a loop with the calculation and lo! The test passes. Woohoo?

Not that long ago I went through a process of re-writing a lot of unit tests that I had written a year or two before - we were about to do a big refactor of the code that generated some important numbers, and we wanted our tests to tell us we hadn't broken anything with the refactor. The only problem was, the tests used a calculation rather similar to the production calculation, and borrowed some constants to create the expected number. I ended up running the tests to find the numbers the test was generating as expected values, and hardcoding those values into the test. It felt dirty, but it was necessary - I wanted to make sure the refactoring didn't change the expected numbers as well as the ones generated by the real code. This is not a process I want to go through ever again.

When you're testing these sorts of things, you try and think of a few representative cases, code them into your tests, and hope that you've covered the main areas. What would be far nicer is if you could shove a whole load of different data into your system-under-test and make sure the results look sane.

An example from the Java driver is that we had tests that were checking the parsing of the URI - you can initialise your MongoDB settings simply using a String containing the URI.

The old tests looked like:

(See MongoClientURITest)

Using Spock's data driven testing, we changed this to:

(See MongoClientURISpecification)

Instead of having a separate test for every type of URL that needs parsing, you have a single test and each line in the `where:` section is a new combination of input URL and expected outputs. Each one of those lines used to be a test. In fact, some of them probably weren't tests as the ugliness and overhead of adding another copy-paste test seemed like overkill. But here, in Spock, it's just a case of adding one more line with a new input and set of outputs.
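As a rough illustration of the shape of such a test (a sketch, not the driver's actual MongoClientURISpecification), the spec below uses the JDK's `java.net.URI` as a stand-in parser, so it needs nothing beyond Spock itself on the test classpath. The spec name, feature name and data rows are all made up for the example.

```groovy
import spock.lang.Specification

// Hypothetical spec: java.net.URI stands in for the driver's own URI parser.
class UriParsingSpecification extends Specification {

    def 'should parse the URI into host, port and path'() {
        given:
        def parsed = new URI(uri)

        expect:
        parsed.host == host
        parsed.port == port   // java.net.URI reports -1 when no port is given
        parsed.path == path   // ...and an empty path when there is none

        where:
        uri                                   | host             | port  | path
        'mongodb://db.example.com'            | 'db.example.com' | -1    | ''
        'mongodb://db.example.com:27017'      | 'db.example.com' | 27017 | ''
        'mongodb://db.example.com:27017/test' | 'db.example.com' | 27017 | '/test'
        'mongodb://localhost/test.coll'       | 'localhost'      | -1    | '/test.coll'
    }
}
```

Covering another "what if?" is then one more row in the table, with the column headings acting as the variable names used in the `expect:` block.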

The major benefit here, to me, is that it's dead easy to add another test for a "what if?" that occurs to the developer. You don't have to have yet another test method that leaves someone else wondering "what the hell are we testing this for?". You just add another line which documents another set of expected outputs given the new input.

It's easy, it's neat, it's succinct.

One of the major benefits of this to our team is that we don't argue any more about whether a single test is testing too much. In the past, we had tests like:

And I can see why we have all those assertions in the same test, because technically these are all the same concept - make sure that each type of WriteConcern creates the correct command document. I believe these should be one test per line - because each line in the test is testing a different input and output, and I would want to document that in the test name ("fsync write concern should have fsync flag in getLastError command", "journalled write concern should set j flag to true in getLastError command" etc). Also don't forget that in JUnit, if the first assert fails, the rest of the test is not run. Therefore you have no idea if this is a failure that affects all write concerns, or just the first one. You lose the coverage provided by the later asserts.

But the argument against my viewpoint is then we'd have seven different one-line tests. What a waste of space.

You could argue for days about the best way to do it, or that this test is a sign of some other smell that needs addressing. But if you're in a real world project and your aim is to both improve your test coverage and improve the tests themselves, these arguments are getting in the way of progress. The nice thing about Spock is that you can take these tests that test too much, and turn them into something a bit prettier:

You might be thinking, what's the advantage over the JUnit way? Isn't that the same thing but Groovier? But there's one important difference - all the lines under `where:` get run, regardless of whether the line before passes or fails. This is basically seven different tests, but takes up the same space as one.
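To make that concrete, here is a minimal sketch of a data-driven spec in this style. It does not use the driver's WriteConcern class: `commandFor` is a hypothetical stand-in helper, invented for this example, that builds a getlasterror-style map from a set of options. Each row under `where:` is run as its own iteration, so a failure in one row does not stop the rows after it from being checked.

```groovy
import spock.lang.Specification

class WriteConcernCommandSpecification extends Specification {

    // Hypothetical stand-in for the code under test: starts from the base
    // command and copies across only the options that are "switched on".
    static Map commandFor(Map options) {
        [getlasterror: 1] + options.findAll { it.value }
    }

    def 'should build the getlasterror command document'() {
        expect:
        commandFor(options) == command

        where:
        options              | command
        [:]                  | [getlasterror: 1]
        [fsync: true]        | [getlasterror: 1, fsync: true]
        [j: true]            | [getlasterror: 1, j: true]
        [w: 2]               | [getlasterror: 1, w: 2]
        [w: 2, fsync: false] | [getlasterror: 1, w: 2]
    }
}
```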

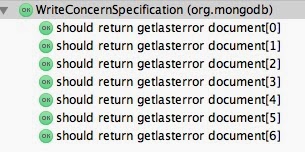

That's great, but if just one of these lines fails, how do you know which one it was if all seven tests are masquerading as one? That's where the awesome `@Unroll` annotation comes in. This reports the passing or failing of each line as if it were a separate test. By default, when you run an unrolled test it will get reported as something like:

But in the test above we put some magic keywords into the test name: '#wc should return getlasterror document #commandDocument' - note that these values with `#` in front are the same headings from the `where:` section. They'll get replaced by the value being run in the current test:
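A small sketch of the naming mechanism, again using `java.net.URI` as a stand-in rather than any driver class (the spec and its rows are invented for illustration). The `#uri` and `#host` placeholders in the feature name refer to the column headings in the `where:` table:

```groovy
import spock.lang.Specification
import spock.lang.Unroll

class UnrolledNamingSpecification extends Specification {

    @Unroll
    def '#uri should have host #host'() {
        expect:
        new URI(uri).host == host

        where:
        uri                              | host
        'mongodb://localhost'            | 'localhost'
        'mongodb://db.example.com:27017' | 'db.example.com'
    }
}
```

With the placeholders substituted, the two iterations should be reported under names along the lines of "mongodb://localhost should have host localhost" and "mongodb://db.example.com:27017 should have host db.example.com". Note that from Spock 2.0 onwards iterations are unrolled by default, so the annotation mainly matters on 1.x versions.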

Yeah, it can be a bit of a mouthful if the `toString` is hefty, but it does give you an idea of what was being tested, and it's prettier if the inputs have nice succinct string values:

This, combined with Spock's awesome power assert makes it dead simple to see what went wrong when one of these tests fails. Let's take the example of (somehow) the incorrect host being returned for one of the input URIs:
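If you want to see this style of failure report without setting up a spec, Groovy's built-in `assert` renders failures in a similar way to a failed Spock condition, so a plain script is enough for a quick look. The sketch below is not the driver's test: it uses `java.net.URI` as a stand-in parser and a deliberately wrong expected host, both chosen purely for illustration.

```groovy
// Deliberately wrong expectation, to trigger a power-assert style failure report.
def uri = 'mongodb://db.example.com:27017/test'
def expectedHost = 'localhost'

try {
    assert new URI(uri).host == expectedHost
} catch (AssertionError e) {
    // The message repeats the asserted expression and lists the value of
    // each sub-expression underneath it.
    println e.message
}
```

The printed message includes both the host that was actually parsed and the one that was expected, which is usually enough to spot the problem without reaching for a debugger.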

Data driven testing might lead one to over-test the simple things, but the cost of adding another "what if?" is so low - just another line - and the additional safety you get from trying a different input is rather nice. We've been using them for parsers and simple generators, where you want to throw in a bunch of inputs to a single method and see what you get out.

I'm totally sold on this feature, particularly for our type of application (the Java driver does a lot of taking stuff in one shape and turning it into something else). Just in case you want a final example, here's one more.

The old way:

...and in Spock:

Thanks for the example! It is always nice to see more examples of how to specify the data-driven tests, specifically real-world tests rather than the simple examples...

Thanks! There are more examples in the code in GitHub. I know we can do better (and we still have tests I'd like to convert) but it is nice to be able to provide real examples in the wild.

Dear Trisha

Can you please tell me which are good sources for learning Spock? I would be very thankful if you could email me or reply in the comment section.

I've put some links on this page: http://trishagee.github.io/presentation/groovy_vs_java/

Brilliant article :)

Dear Trisha

Can you please tell me how I can call a method with parameters from inside a method in Spock?

And how can I return a value from a method in Spock?

You write Spock tests in Groovy, so it sounds like you need to do some Groovy tutorials: http://www.groovy-lang.org/learn.html