This is the missing piece in the end-to-end view of the Disruptor. Brace yourselves, it's quite long. But I decided to keep it in a single blog so you could have the context in one place.

The important areas are: not wrapping the ring; informing the consumers; batching for producers; and how multiple producers work.

ProducerBarriers

The Disruptor code has interfaces and helper classes for the Consumers, but there's no interface for your producer, the thing that writes to the ring buffer. That's because nothing else needs to access your producer, only you need to know about it. However, like the consuming side, a ProducerBarrier is created by the ring buffer and your producer will use this to write to it.

Writing to the ring buffer involves a two-phase commit. First, your producer has to claim the next slot on the buffer. Then, when the producer has finished writing to the slot, it will call commit on the ProducerBarrier.

So let's look at the first bit. It sounds easy - "get me the next slot on the ring buffer". Well, from your producer's point of view it is easy. You simply call nextEntry() on the ProducerBarrier. This will return you an Entry object which is basically the next slot in the ring buffer.

The ProducerBarrier makes sure the ring buffer doesn't wrap

Under the covers, the ProducerBarrier is doing all the negotiation to figure out what the next slot is, and if you're allowed to write to it yet.

(I'm not convinced the shiny new graphics tablet is helping the clarity of my pictures, but it's fun to use).

For this illustration, we're going to assume there's only one producer writing to the ring buffer. We will deal with the intricacies of multiple producers later.

The ConsumerTrackingProducerBarrier has a list of all the Consumers that are accessing the ring buffer. Now to me this seemed a bit odd - I wouldn't expect the ProducerBarrier to know anything about the consuming side. But wait, there is a reason. Because we don't want the "conflation of concerns" a queue has (it has to track the head and tail which are sometimes the same point), our consumers are responsible for knowing which sequence number they're up to, not the ring buffer. So, if we want to make sure we don't wrap the buffer, we need to check where the consumers have got to.

In the diagram above, one Consumer is happily at the same point as the highest sequence number (12, highlighted in red/pink). The second Consumer is a bit behind - maybe it's doing I/O operations or something - and it's at sequence number 3. Therefore consumer 2 has the whole length of the buffer to go before it catches up with consumer 1.

The producer wants to write to the slot on the ring buffer currently occupied by sequence 3, because this slot is the one after the current ring buffer cursor. But the ProducerBarrier knows it can't write here because a Consumer is using it. So the ProducerBarrier sits and spins, waiting, until the consumers move on.
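The check described above boils down to a comparison against the slowest consumer. Here's a rough sketch of it, not the Disruptor's actual code: the class and method names are made up, the buffer size of 10 is inferred from the diagram (the slots hold sequences 3 to 12), and I'm assuming, as in the picture, that a slot is still "in use" while a consumer's sequence sits on it.

```java
// Toy model of the wrap check; WrapCheck and its methods are hypothetical names.
final class WrapCheck {
    // Lowest sequence that any consumer is still sitting on.
    static long slowestConsumer(long... consumerSequences) {
        long min = Long.MAX_VALUE;
        for (long sequence : consumerSequences) {
            min = Math.min(min, sequence);
        }
        return min;
    }

    // Claiming `next` reuses the slot that held (next - bufferSize).
    // That is only safe once every consumer has moved past that sequence.
    static boolean canClaim(long next, int bufferSize, long... consumerSequences) {
        long sequenceBeingOverwritten = next - bufferSize;
        return sequenceBeingOverwritten < slowestConsumer(consumerSequences);
    }

    public static void main(String[] args) {
        int bufferSize = 10; // assumption taken from the diagram
        // Consumer 1 is at 12, consumer 2 is still on 3: sequence 13 must wait.
        System.out.println(canClaim(13, bufferSize, 12, 3)); // false
        // Consumer 2 has moved on to 9: the slot that held 3 is free.
        System.out.println(canClaim(13, bufferSize, 12, 9)); // true
    }
}
```

A real barrier would loop on this check (spinning or yielding) rather than returning a boolean.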

Claiming the next slot

Now imagine consumer 2 has finished that batch of entries, and moves its sequence number on. Maybe it got as far as sequence 9 (in real life I expect it will make it as far as 12 because of the way consumer batching works, but that doesn't make the example as interesting).

The diagram above shows what happens when consumer 2 updates to sequence number 9. I've slimmed down the ConsumerBarrier in this picture because it takes no active part in this scene.

The ProducerBarrier sees that the next slot, the one that had sequence number 3, is now available. It grabs the Entry that sits in this slot (I've not talked specifically about the Entry class, but it's basically a bucket for stuff you want to put into the ring buffer slot which has a sequence number), sets the sequence number on the Entry to the next sequence number (13) and returns this entry to your producer. The producer can then write whatever value it wants into this Entry.
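To make the "same bucket, new sequence number" idea concrete, here's a small toy model of the claim step. It borrows the names Entry and nextEntry() from the text, but the bodies are my own illustration: the ten-slot size is taken from the diagram, the value field is a placeholder, and the wrap check is left out to keep it short.

```java
// Toy single-producer claim; illustrative only, not the Disruptor's implementation.
final class ClaimSketch {
    static final class Entry {
        long sequence;
        String value; // stand-in for whatever your application stores
    }

    private final Entry[] slots;
    private long lastClaimed;

    ClaimSketch(int size, long firstSequence) {
        slots = new Entry[size];
        // Entries are created once and reused; only their contents change.
        for (int i = 0; i < size; i++) {
            Entry entry = new Entry();
            entry.sequence = firstSequence + i;
            slots[indexOf(entry.sequence)] = entry;
        }
        lastClaimed = firstSequence + size - 1;
    }

    private int indexOf(long sequence) {
        return (int) (sequence % slots.length);
    }

    Entry entryInSlotOf(long sequence) {
        return slots[indexOf(sequence)];
    }

    // Hand back the existing Entry in the next slot, stamped with the next sequence.
    Entry nextEntry() {
        long next = lastClaimed + 1;
        Entry entry = slots[indexOf(next)];
        entry.sequence = next;
        lastClaimed = next;
        return entry;
    }

    public static void main(String[] args) {
        ClaimSketch ring = new ClaimSketch(10, 3); // slots hold sequences 3..12
        Entry before = ring.entryInSlotOf(3);
        Entry claimed = ring.nextEntry();
        claimed.value = "hello";
        System.out.println(claimed.sequence);  // 13
        System.out.println(before == claimed); // true: same object, new sequence
    }
}
```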

Committing the new value

The second phase of the two-stage commit is, well, the commit.

The green represents our newly updated Entry with sequence 13 - yeah, I'm sorry, I'm red-green colour-blind too. But other colours were even more rubbish.

When the producer has finished writing stuff into the entry it tells the ProducerBarrier to commit it.

The ProducerBarrier waits for the ring buffer cursor to catch up to where we are (for a single producer this will always be a bit pointless - e.g. we know the cursor is already at 12, nothing else is writing to the ring buffer). Then the ProducerBarrier updates the ring buffer cursor to the sequence number on the updated Entry - 13 in our case. Next, the ProducerBarrier lets the consumers know there's something new in the buffer. It does this by poking the WaitStrategy on the ConsumerBarrier - "Oi, wake up! Something happened!" (note - different WaitStrategy implementations deal with this in different ways, depending upon whether it's blocking or not).

Now consumer 1 can get entry 13, consumer 2 can get everything up to and including 13, and they all live happily ever after.
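For the curious, here's roughly what the "poke" might look like with a blocking style of waiting. This is a sketch under my own assumptions, using a plain lock and condition from java.util.concurrent; CommitSketch, commit and waitFor are hypothetical names, and a non-blocking strategy would spin on the cursor instead of sleeping on a condition.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Toy commit with a blocking wake-up; not the Disruptor's own WaitStrategy.
final class CommitSketch {
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition published = lock.newCondition();
    private long cursor;

    CommitSketch(long initialCursor) {
        cursor = initialCursor;
    }

    // Producer side: move the cursor on and wake any waiting consumers.
    void commit(long sequence) {
        lock.lock();
        try {
            // With a single producer the cursor is always exactly one behind.
            if (sequence != cursor + 1) {
                throw new IllegalStateException("expected " + (cursor + 1) + " but got " + sequence);
            }
            cursor = sequence;
            published.signalAll(); // "Oi, wake up! Something happened!"
        } finally {
            lock.unlock();
        }
    }

    // Consumer side: sleep until `sequence` is published, then report the cursor.
    long waitFor(long sequence) {
        lock.lock();
        try {
            while (cursor < sequence) {
                published.awaitUninterruptibly();
            }
            return cursor;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        CommitSketch ring = new CommitSketch(12);
        Thread consumer = new Thread(() ->
                System.out.println("consumer saw cursor " + ring.waitFor(13)));
        consumer.start();
        ring.commit(13);
        consumer.join();
    }
}
```

Because waitFor returns the cursor rather than just the sequence it asked for, a consumer that was a long way behind gets everything up to the cursor in one go.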

ProducerBarrier batching

Interestingly the Disruptor can batch on the producer side as well as on the Consumer side. Remember when consumer 2 finally got with the programme and found itself at sequence 9? There is a very cunning thing the ProducerBarrier can do here - it knows the size of the buffer, and it knows where the slowest Consumer is. So it can figure out which slots are now available.

If the ProducerBarrier knows the ring buffer cursor is at 12, and the slowest Consumer is at 9, it can let producers write to slots 3, 4, 5, 6, 7 and 8 before it needs to check where the consumers are.
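The arithmetic behind that is short enough to write down. A sketch, again assuming the ten-slot buffer from the diagrams and made-up method names:

```java
// Toy arithmetic for producer-side batching; names are hypothetical.
final class ProducerBatchSketch {
    // Highest sequence that can be claimed without touching the slot
    // the slowest consumer is still on.
    static long highestClaimable(long slowestConsumer, int bufferSize) {
        return slowestConsumer + bufferSize - 1;
    }

    // How many sequences can be handed out before re-checking the consumers.
    static long claimableCount(long cursor, long slowestConsumer, int bufferSize) {
        return Math.max(0L, highestClaimable(slowestConsumer, bufferSize) - cursor);
    }

    public static void main(String[] args) {
        long cursor = 12;
        long slowestConsumer = 9;
        int bufferSize = 10; // assumption taken from the diagram

        System.out.println(claimableCount(cursor, slowestConsumer, bufferSize)); // 6
        for (long s = cursor + 1; s <= highestClaimable(slowestConsumer, bufferSize); s++) {
            System.out.print((s % bufferSize) + " "); // slots 3 4 5 6 7 8
        }
        System.out.println();
    }
}
```

So sequences 13 to 18 land in slots 3 to 8, and the barrier only needs to look at the consumers again before handing out 19.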

Multiple producers

You thought I was done, but there's more.

I slightly lied in some of the above drawings. I implied that the sequence number the ProducerBarrier deals with comes directly from the ring buffer's cursor. However, if you look at the code you'll see that it uses the ClaimStrategy to get this. I skipped this to simplify the diagrams, it's not so important in the single-producer case.

With multiple producers, you need yet another thing tracking a sequence number. This is the sequence that is available for writing to. Note that this is not the same as ring-buffer-cursor-plus-one - if you have more than one producer writing to the buffer, it's possible there are entries in the process of being written that haven't been committed yet.

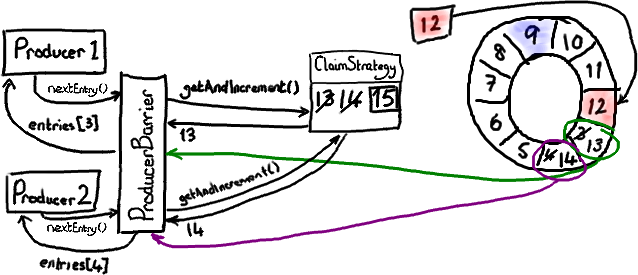

Let's revisit claiming a slot. Each producer asks the ClaimStrategy for the next available slot. Producer 1 gets sequence 13, like in the single producer case above. Producer 2 gets sequence 14, even though the ring buffer cursor is still only pointing to 12, because the ClaimStrategy is dishing out the numbers and has been keeping track of what's been allocated.

So each producer has its own slot with a shiny new sequence number.

I'm going to colour producer 1 and its slot in green, and producer 2 and its slot in a suspiciously pink-looking purple.

Now imagine producer 1 is away with the fairies, and hasn't got around to committing for whatever reason. Producer 2 is ready to commit, and asks the ProducerBarrier to do so.

As we saw in the earlier commit diagram, the ProducerBarrier is only going to commit when the ring buffer cursor reaches the slot behind the one it wants to commit into. In this case, the cursor needs to reach 13 so that we can commit 14. But we can't, because producer 1 is staring at something shiny and hasn't committed yet. So the ClaimStrategy sits there spinning until the ring buffer cursor gets to where it should be.

Now producer 1 wakes up from its coma and asks to commit entry 13 (green arrows are sparked by the request from producer 1). The ProducerBarrier tells the ClaimStrategy to wait for the ring buffer cursor to get to 12, which it already had of course. So the ring buffer cursor is incremented to 13, and the ProducerBarrier pokes the WaitStrategy to let everything know the ring buffer was updated. Now the ProducerBarrier can finish the request from producer 2, increment the ring buffer cursor to 14, and let everyone know that we're done.

You'll see that the ring buffer retains the ordering implied by the order of the initial nextEntry() calls, even if the producers finish writing at different times. It also means that if a producer is causing a pause in writing to the ring buffer, when it unblocks any other pending commits can happen immediately.
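If you want to see that ordering in action, here's a minimal two-producer sketch. It is my own toy, not the Disruptor's ClaimStrategy: one AtomicLong hands out sequences, another plays the cursor, and commit simply spins until the cursor is one behind. The wrap check and the consumer wake-up are left out.

```java
import java.util.concurrent.atomic.AtomicLong;

// Toy multi-producer claim/commit; class and method names are hypothetical.
final class MultiProducerSketch {
    private final AtomicLong claimed; // last sequence handed out to a producer
    private final AtomicLong cursor;  // last sequence visible to consumers

    MultiProducerSketch(long start) {
        claimed = new AtomicLong(start);
        cursor = new AtomicLong(start);
    }

    // Each caller gets its own sequence, even if nothing has been committed yet.
    long claim() {
        return claimed.incrementAndGet();
    }

    // Commits become visible strictly in claim order.
    void commit(long sequence) {
        while (cursor.get() != sequence - 1) {
            Thread.yield(); // waiting for the producer in front of us
        }
        cursor.set(sequence);
    }

    long cursor() {
        return cursor.get();
    }

    public static void main(String[] args) throws InterruptedException {
        MultiProducerSketch ring = new MultiProducerSketch(12);
        long first = ring.claim();  // 13, producer 1
        long second = ring.claim(); // 14, producer 2

        Thread producer2 = new Thread(() -> ring.commit(second));
        producer2.start();
        Thread.sleep(50);                  // producer 1 stares at something shiny
        System.out.println(ring.cursor()); // 12: producer 2 is stuck behind 13

        ring.commit(first);
        producer2.join();
        System.out.println(ring.cursor()); // 14: both commits are now visible
    }
}
```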

Phew. And I managed to describe all that without mentioning a memory barrier once.

EDIT: The most recent version of the RingBuffer hides away the Producer Barrier. If you can't see a ProducerBarrier in the code you're looking at, then assume where I say "producer barrier" I mean "ring buffer".

EDIT 2: Note that version 2.0 of the Disruptor uses different names to the ones in this article. Please see my summary of the changes if you are confused about class names.

What happens if, in the two producer scenario, producer 1 fails to commit to the buffer? Is producer 2 blocked from ever completing the commit? Does the ring buffer suffer an effective deadlock at that point?

The producers need to manage this scenario carefully, as they do need to be aware that they are blocking other producers.

If one of the producers fails to commit because the producer is broken, your whole system has bigger problems than deadlock. If, however, it fails to commit because the transaction it was trying to complete failed, two options spring to mind: 1) retry until the commit is successful (the other producer will block until success) or 2) commit a "dead message" to the ring buffer so that the blocked producers can continue, and the consumers will ignore the dead message while still allowing the sequence numbers to increment in the expected fashion.

3) Similar to 2): time out the "failed" commit and let the client decide what to do at the business level.
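As a rough illustration of option 2), a "dead message" can be as simple as a flag on the entry. Everything here is hypothetical (the flag, the class names, the payloads); the point is only that the sequence numbers stay gap-free while the consumer skips the dead entry.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy "dead message" handling; the dead flag is an assumption, not a Disruptor feature.
final class DeadMessageSketch {
    static final class Entry {
        final long sequence;
        final String payload;
        final boolean dead;

        private Entry(long sequence, String payload, boolean dead) {
            this.sequence = sequence;
            this.payload = payload;
            this.dead = dead;
        }

        static Entry live(long sequence, String payload) {
            return new Entry(sequence, payload, false);
        }

        // Committed by a producer whose real work failed, so others can carry on.
        static Entry deadMessage(long sequence) {
            return new Entry(sequence, null, true);
        }
    }

    // Consumer view: every sequence is seen in order, dead entries are ignored.
    static List<String> consume(List<Entry> entries) {
        List<String> handled = new ArrayList<>();
        long expected = entries.isEmpty() ? 0 : entries.get(0).sequence;
        for (Entry entry : entries) {
            if (entry.sequence != expected) {
                throw new IllegalStateException("gap at sequence " + expected);
            }
            expected++;
            if (!entry.dead) {
                handled.add(entry.payload);
            }
        }
        return handled;
    }

    public static void main(String[] args) {
        List<Entry> committed = Arrays.asList(
                Entry.live(13, "order-1"),
                Entry.deadMessage(14), // the failed transaction
                Entry.live(15, "order-3"));
        System.out.println(consume(committed)); // [order-1, order-3]
    }
}
```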

Thank you for the quick response. One other question on the disruptor: do you have strategies for handling either slow consumers or slow producers (in the multi-producer scenario)?

On a side note, it would be great to hear about other architectural aspects of LMAX such as your approach to high availability, data persistence, work distribution among multiple processing units, etc.

Martin Fowler's article gives a bigger context of the LMAX architecture, but we're not going to give away all our secrets yet ;)

http://martinfowler.com/articles/lmax.html

If your consumers are consistently slower than your producers, your system is always going to back up regardless of your architecture. One of the ways you might handle this is to have two consumers doing the same thing, but one processes even sequence numbers and one processes odd ones. That way you could potentially process more in parallel (of course you can expand beyond two).
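A sketch of that odd/even split, generalised to any number of consumers. The names are made up; each consumer still sees every sequence number but only acts on the ones that belong to it.

```java
// Toy sharding rule for consumers that share the same job; names are hypothetical.
final class ShardedConsumerSketch {
    // Consumer `ordinal` (0-based) of `consumerCount` handles this sequence?
    static boolean handles(long sequence, int ordinal, int consumerCount) {
        return Math.floorMod(sequence, (long) consumerCount) == ordinal;
    }

    public static void main(String[] args) {
        int consumerCount = 2; // consumer 0 takes evens, consumer 1 takes odds
        for (long sequence = 10; sequence <= 13; sequence++) {
            for (int ordinal = 0; ordinal < consumerCount; ordinal++) {
                if (handles(sequence, ordinal, consumerCount)) {
                    System.out.println("sequence " + sequence + " -> consumer " + ordinal);
                }
            }
        }
    }
}
```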

If your producers are slow, then it might point to your design doing too many things in one place. Our producers are usually simple things - they take data from one place and stick it in the ring buffer. If your producers are doing a lot of processing, you could think about moving that logic into an early consumer. Your producer could write the raw data into the ring buffer, the new consumer could read the raw data off the ring buffer, process it, write the processed data into the Entry, and have all downstream consumers dependent upon this consumer. That might suggest ways you can parallelise this work.

Since the producer is the one that knows whether the buffer is full, and since the producer is managing writing to it, it should be easy - implementing the producer is totally up to you, so you can decide exactly how to deal with the case when the ring buffer is full.

If I understand you correctly, what you're saying is that if you had a consumer which was doing asynchronous logging, but it was logging much slower than the producer was producing, the producer would end up blocking, waiting for the logger to finish before it can publish to the ring buffer?

Yes, that's true. If you have any consumer which runs slower than the producer, eventually everything will be hanging around waiting for that consumer. If you don't want to block the ring buffer, I guess there's a few things you could try - have your consumer representing the async logger punt the data off to somewhere else to deal with it (maybe a second disruptor with a *massive* ring buffer, or some sort of logging service that won't block this consumer), or parallelise the logging, so one consumer logs the odds, the other the evens (or mod 4, or whatever). Then you just need to write a simple mechanism to weave the logs back together when/if you need to read them (obviously you want your different loggers logging to different files to avoid contention).

Brilliant article, you've explained the concepts brilliantly. We're thinking of including Disruptor in our next project.

@Shane Awesome, let me know how it goes!

Hi,

I am new to the Disruptor framework. I have a scenario where I need 100 producers and 200 consumers. Can I use the Disruptor framework in this case?

Hi siky,

Sure, there's nothing to stop you doing that. But you're going to want to check your "wait" settings to make sure you've got the best configuration. To get the most speed out of the Disruptor, though, you want a CPU core per producer/consumer. If that's what you're after, you're going to have to shard your problem more carefully so you can split it over multiple machines.

Hi Trisha,

Thank you for the great article.

Assume that I transfer messages between two different modules.

Currently I use JMS: I have a producer that sends messages to a queue and consumers that pull them off the queue.

Is the Disruptor meant to be a replacement for that configuration? What type of architecture would you find it suits?

thanks.

In this case you'd use the Disruptor simply to replace the queue part. We use Informatica Ultra Messaging to transport messages, and the Disruptor in place of the queue and consumers that would traditionally be used.

Hi Trisha,

This is a really great way of explaining this pattern, and I appreciate it. I got a feel for what the Disruptor pattern is and what it tries to do. I have not gone through the source code nor used it in any projects, so I have a couple of questions before using it.

Is the entire Disruptor pattern implemented using an array?

In the case of sequencing, why does the number always keep incrementing? What happens to the array index?

Unlike traditional circular rings, here you are updating the slots, not inserting a new one with the message. In that case, how will buffer overflow happen? You are never going to increase the initial capacity of the RingBuffer, if I understand it correctly.

Can we have our own implementation of ProducerBarrier and plug it in to this framework?

No, only the RingBuffer is an array. And you don't need to use the Disruptor's RingBuffer, you can replace it with your own data structure if you need. The ProducerBarrier no longer exists as a separate entity in Disruptor 2.0, all that functionality is folded into the RingBuffer.

The sequence number always increases so that every consumer can track its own sequence numbers, the array index is calculated using a mod operation (see http://mechanitis.blogspot.co.uk/2011/06/dissecting-disruptor-whats-so-special.html).

I'm not sure what you mean by buffer overflow. What can happen is that as you are continually writing to the ring buffer, you're over-writing old data in old slots, so you need to make sure that you don't require that data before you overwrite it.
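To picture the mod operation: the sequence number keeps counting up forever, and the slot index just goes round and round. A small sketch with made-up names; the bit-mask variant is a common shortcut when the size is a power of two, and I'm showing it as an illustration rather than quoting the Disruptor's code.

```java
// Toy mapping from an ever-increasing sequence to a slot index.
final class SlotIndexSketch {
    static int indexByMod(long sequence, int size) {
        return (int) (sequence % size);
    }

    // Same answer as the mod for non-negative sequences, but only valid for power-of-two sizes.
    static int indexByMask(long sequence, int size) {
        if (Integer.bitCount(size) != 1) {
            throw new IllegalArgumentException("size must be a power of two");
        }
        return (int) (sequence & (size - 1));
    }

    public static void main(String[] args) {
        int size = 8;
        for (long sequence = 6; sequence <= 10; sequence++) {
            // Indexes wrap: 6, 7, 0, 1, 2
            System.out.println(sequence + " -> slot " + indexByMod(sequence, size));
        }
        System.out.println(indexByMask(13, size) == indexByMod(13, size)); // true
    }
}
```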

Thanks for the reply. I understand it better now. I had mistakenly understood that the sequence number mapped directly to the array index. It actually uses a mod operation.

I have one more question. When the producer finds that a particular slot is not free for writing, how long will it wait? Is it a blocking I/O operation in this case?

Depends on the ClaimStrategy - you can plug in different ones, and it will depend upon your application and what you want to do. By default, your producer will wait forever. But if your RingBuffer is full and the consumers aren't reading from it, then it's Game Over anyway.

Is there any case where the RingBuffer is full? We are updating it, right? Every time, we update the slots with the data and the producer updates the sequence number. So in which case does this behaviour happen?

Yes, the RingBuffer can fill up, if the consumers aren't reading as fast as the producer is writing into it. If the producer is running faster than the consumers, it will have to wait for a free slot and apply back-pressure throughout the system - when it starts waiting for a slot, systems that are upstream from the producer will need to slow down as well.

If you think this is something that might happen in your system, you need to monitor for this behaviour so you can identify the problem. If this happens regularly you have a more serious problem with your application - the RingBuffer is there to deal with bursts of traffic, it should fill and drain as the consumers catch up.

I don't understand what you need the cursor for. As you have a sequence number in each Entry anyway, you could set the initial value of the sequence number to 0x8000000000000000L (when the ring buffer is created), and then each producer must update the sequence number of an allocated entry after it is done producing, so that the consumers just need to check for a valid sequence number instead of waiting for the cursor. This allows the consumer to skip an entry that is not yet ready and get back to it later, so that the consumer can do some useful work while a producer is creating an entry. This is especially helpful if you have multiple producers and consumers.

So an entry is valid if its sequence number is greater than or equal to next minus the ring-buffer size (isValid = entry.seqNumber >= (ringbuffer.next - ringbuffer.length)).

Additionally you don't need the CAS operation to the cursor anymore.

Sequencing and the CAS operation for the cursor are the fundamental basis of the Disruptor. The whole point is to process the events in order (i.e. not to skip an event that isn't ready). The use-case above sounds totally viable but not at all suitable for a Disruptor-based architecture.

For more detail as to why the Sequence number is important, take a look at my most recent presentation on a use-case for the Disruptor: http://mechanitis.blogspot.co.uk/2012/03/new-disruptor-presentation-unveiled-to.html

You wrote "In this case, the cursor needs to reach 13 so that we can commit 14." What I am trying to tell you is that you can fix this case. With my suggestion above you can commit 14 before 13 and consumers can consume 14 before 13, but you still won't miss 13. This is not mandatory, so while one consumer could still process all requests in their correct order (11, 12, 13...), others could process them in "random" order, and producers are not forced to wait with their commits. It depends on the situation, of course, but you avoid the performance of the whole pipeline dropping to that of the slowest producer.

My 2 cents

You could totally do that, if you want, but sequencing is fundamental to the design of the Disruptor. It was actually created to support reliable messaging, so having messages processed in the order they arrive is one of the reasons it was designed this way.

If you prefer an architecture where ordering isn't important, then the Disruptor might not be the right choice for you.

Maybe I'm wrong, but I think you are trying to maintain an order that was never there. It's like in quantum mechanics: if you think you have an order in a highly volatile environment, you are setting up an illusion. Look at a hashmap: do you think it has a state in a highly concurrent environment? It doesn't. The moment you check if it contains a key it might, but the key may have been deleted before you receive the information that there is such a key, so the moment the method "containsKey" tells you true, this information may already be outdated. The information moves slower than the state of the hashmap changes.

In a network environment you are not in control of the order. What is the order? The moment the user pushes the "buy" button? But from there on there is a lot of noise, like the TCP/IP stack on the source machine, the TCP/IP stack on each node in between (and all the threads running in parallel in between) and the TCP stack on the destination machine. Look at all the tiny details: if two users push the "buy" button in the same nanosecond, you can never say which one will arrive first. Maybe there is an error while transmitting the TCP packet and it is re-transmitted, or the kernel of one machine had to run an MP3 player before it could process the TCP packet and therefore this packet is now a couple of microseconds slower. So what about the order now?

I think we should think about complex computer systems as in quantum mechanics. You are no longer in control of things and any kind of order is just an illusion, therefore we should give this up: there is no state, no order. The more changes per second are made in a system, the clearer it gets that there is no real state and no such thing as an order. In other words: the information always moves slower than the state of the system changes.

That doesn't mean that you can't have an order in the end. In the macro view, yes, there is an order. The result must be ordered and the user must have the feeling that there is an order, but looking closer there is no need for order. All the system must ensure is that an order is the final result, but it is impossible to really create an order in a high performance environment.

However, this is just my opinion.

You're correct in a lot of what you say, but there are places you can ensure ordering, and we use the Disruptor to do this. For example, between services inside our application we want to ensure ordering of messages (in fact, that's exactly why we introduced the sequence number on the Disruptor). We implemented reliable messaging using this sequence number, so that if one service received messages 3, 4, and 7, it can NAK for messages 5 and 6 - this way you can ensure ordering and you can ensure you haven't missed any messages. It's actually a core part of what we do - reliably messaging and consistent ordering.

We don't apply this all the way out to the customer of course because the internet itself is not exactly reliable. So, while you are correct under many circumstances, you can set up your architecture precisely to ensure sequencing, which is exactly what we do. Martin Fowler's article about our architecture is probably the best place to get a feel for this: http://martinfowler.com/articles/lmax.html
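The gap-spotting part of that is easy to sketch. This is not LMAX's messaging code, just an illustration with hypothetical names of how a receiver might work out which sequence numbers to NAK for:

```java
import java.util.ArrayList;
import java.util.List;

// Toy gap detection over received sequence numbers; names are hypothetical.
final class GapDetectorSketch {
    // Given sequences in arrival order, return the ones that were skipped.
    static List<Long> missing(long... received) {
        List<Long> gaps = new ArrayList<>();
        if (received.length == 0) {
            return gaps;
        }
        long expected = received[0];
        for (long sequence : received) {
            for (long skipped = expected; skipped < sequence; skipped++) {
                gaps.add(skipped);
            }
            expected = Math.max(expected, sequence + 1);
        }
        return gaps;
    }

    public static void main(String[] args) {
        // Messages 3, 4 and 7 arrived, so 5 and 6 need to be requested again.
        System.out.println(missing(3, 4, 7)); // [5, 6]
    }
}
```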

Hi Trisha,

I am new to the Disruptor. This article is really helpful for beginners. I have the following two queries:

Q1. I found a code example of one producer to one consumer (http://www.kotancode.com/2012/01/06/hello-disruptor/) and

one producer to multiple dependent consumers (http://mechanitis.blogspot.in/2011/07/dissecting-disruptor-wiring-up.html).

I would like to get a code sample for multiple producers to multiple consumers, or a Sequencer: 3P – 1C. Can you please refer me to any blogs or code samples?

Q2. This is a generic question regarding how multiple producer works.

Can the Disruptor be used in an environment where a single file/variable is updated by multiple producers? For example, there are two producers (P1, P2), which update a single shared variable (named "count").

Initially the "count" value is 0.

Producer P1 will add 1 to the current value of "count". So after producer P1 has run, the value of count will be (0+1) = 1.

Producer P2 will add 2 to the current value of "count". So after producer P2 has run, the value of count will be (1+2) = 3.

Basically, P2 needs to read the updated "count" value (written by P1) and add its increment (2).

How can we maintain the order of execution of the producers? (P2 must always execute after P1.)

At the consumer side, consumers (C1, C2) will read the "count" value sequentially (1, 3, ...). This is OK, as each consumer will only read the ring buffer values in sequential order.

Thanks,

Prasenjit.

Hi Prasenjit,

The code examples I usually work with are the performance tests which are included in the Disruptor source download. I'm working on some example code at the moment but I'm not going to do a multiple-producer version because my usual answer to the multiple-producers question is "don't do it" - there is almost always a way to split your architecture so you don't need it. You want a single producer placing things as fast as possible onto the ring buffer, and then all the logic should happen in an event handler.

For specific questions as to how the Disruptor works, the best place to look is the Google Group - https://groups.google.com/forum/?#!forum/lmax-disruptor. There are a lot of very switched-on people in the group, many of whom have used the Disruptor in anger in a lot of different ways. You might find your question has already been answered there, if not, if you ask it there then someone will be happy to help you. As you can see by the amount of time it has taken me to respond to this, my blog is not the best place to get answers!

The short answer to your question is, you should not have two things writing to a shared variable. This is exactly the sort of thing that will lead to contention, and the Disruptor is not going to help you in this case. Also producers should not be updating anything, their job is only to populate the event in the ring buffer with the data it needs. If you're manipulating that data (e.g. incrementing a count) then that's a responsibility for an event handler.

It sounds to me like this count variable is not quite in the correct place. Without more detailed information as to what you're trying to achieve, however, I can't give you any more guidance. I suggest you contact the google group with a more detailed example of what you're trying to do.

Trisha

Just managed to wrap my head around these lil rings...

From what I understand, the ring buffers are there to serve multi-threaded consumer scenarios for IPC (inter-process communication)... but is there any chance the rings can be very large, to serve and replace JMS implementations in the future?

Conceptually, can these ring buffers proxy traditional JMS queues in behaviour, with the added advantage of being able to fetch messages in batches?

Also, I'm told that the ideal ring size depends on the amount of CPU power available. Can't locate the blog for that... would like to be able to compute that.

Curious.

tx

Sizing of the ring buffer is totally down to the sort of performance you want. You can do calculations to work out (approximately) what these should be, but fundamentally you want tests to check this hypothesis, certainly if performance is important to you.

At LMAX, our rings are very large, so provided you have enough memory there is no problem with this. Personally I'm not sure what's involved with replacing JMS implementations with the Disruptor, but I bet there are people out there trying it right now. The best place to ask this question is probably the Google group: http://groups.google.com/group/lmax-disruptor

Hi Trisha,

Is there any place where all the WaitStrategy and ClaimStrategy have been defined in detail and how one can identify the right strategy?

Also if my system is processing 10000 ticks per second then what should be the ideal size of my ring buffer?

The source code for each strategy has a comment at the top explaining when to use them. As for the ideal size of your ring buffer, that depends on a number of variables including memory available. Best thing to do is to write some tests that simulate your system and find out what sort of performance you get.

Take a look at the Google Group too, there's a whole history of questions and answers on there which are dead useful.

Hi Trisha,

I was wondering...for a web server dealing with multiple http-threads should we use multiple producers ? And if yes should we use 1 producer per http-thread ?

By the way... Thank you for your article it is an awesome work :) !

Hi - depends on your use-case, but if you are going to use multiple producers you should look at Disruptor 3.0, which has seriously improved the multi-producer use-case. Try asking on the google group for more specific info if you need it - the guys there are much more up to date than I am!

Hi, do we have benchmarks in which we have a very high number of consumers (1P - 200C)?

At LMAX we tended to allocate a CPU core to a consumer, so we would not have tried out that many consumers. Someone else might have done this benchmark, although I'm not aware of it.

Hi,

If the consumer's event processing speed is always slower than the publisher's, how can it keep up?

For example: the ring size is 2048, the publishing speed is 2048/s, and event processing is 1024/s.

Your consumers MUST keep up with, or ideally out-pace, your producers. Your producer will be blocking when the queue is full, to add backpressure, so if your consumers are slower than your producers you will have a bottleneck in your system. Consider splitting up the work the consumers do so you can have more of them taking on different responsibilities, or looking at something like a WorkerPool[1] to have multiple consumers that perform the same operations.

[1] https://github.com/LMAX-Exchange/disruptor/blob/master/src/main/java/com/lmax/disruptor/WorkerPool.java
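To put numbers on the question above: with a 2048-slot ring, 2048 events/s going in and 1024 events/s coming out, the backlog grows by 1024 events every second. A back-of-the-envelope sketch, assuming the ring starts empty and the rates are constant (the names are made up):

```java
// Toy arithmetic for how quickly a ring fills when consumers are slower than producers.
final class BackpressureArithmetic {
    // Seconds until an initially empty ring is full; infinite if the consumers keep up.
    static double secondsUntilFull(int ringSize, double publishPerSecond, double consumePerSecond) {
        double backlogGrowth = publishPerSecond - consumePerSecond;
        return backlogGrowth <= 0 ? Double.POSITIVE_INFINITY : ringSize / backlogGrowth;
    }

    public static void main(String[] args) {
        System.out.println(secondsUntilFull(2048, 2048, 1024)); // 2.0
    }
}
```

So after about two seconds the ring is full, and from then on the producer can only publish as fast as the consumer frees slots, i.e. 1024/s. A bigger ring only delays that moment; it doesn't change the steady state.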

Can a large ring buffer cause problems like dirtying many cache lines, thrashing, etc..? I.e should the ring buffer be as small as possible?

Ring buffers that are too large or too small both have performance problems, so you need to size it "just right". This will depend upon your hardware and your application, so the best way to work it out is to do some calculations and run some tests. There'll be info in the google group about other people who have gone through this exercise: https://groups.google.com/forum/#!forum/lmax-disruptor

Hi Trisha, I have read your article above and I want to ask:

I have a listener (with a thread pool), which listens to a queue and puts each message into the ring buffer, which has a handler with multiple consumers.

Therefore, I conclude that I have to scale the listener's thread pool, the handler, and the ring buffer's size.

Am I right?

Now, if the producer is faster than the consumer, you said there will be blocking operations. Does that mean the producer thread will just wait around until there is a free slot? If yes, that's bad for the listener thread pool (in my case), right?

But I looked at the code and the tryNext() method can throw an insufficient exception (or something like that :D), so how exactly should I deal with this case? If I should catch the exception, what should I do with the event it holds?

Thank you very much

If the producer is faster than the consumer you will always run into problems, whether you're using the Disruptor or a more traditional queue model. In an unbounded queue, it will just expand forever until you run out of memory. In the Disruptor, the producer does have to wait until there's a free slot before publishing. It's standard in these circumstances to apply backpressure.

I'm not familiar with the insufficient exception, the code has moved on a lot since I last looked at it. For more detailed information, your best bet is to mail the google group: https://groups.google.com/forum/?#!forum/lmax-disruptor